Second HAREM: Evaluation

HAREM, Linguateca


Named entities (NEs) are aligned with the NEs in the Golden Collection (GC), and each receives one of three scores: correct, missing or spurious.
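To make the alignment concrete, here is a minimal Python sketch. It assumes, hypothetically, that each NE is reduced to its character span and that classification and vagueness are ignored. `Span` and `align` are illustrative names, not part of any HAREM tool. Following the Second HAREM rule that partially identified NEs are not correct, only exact boundary matches count.

```python
from __future__ import annotations

from typing import NamedTuple


class Span(NamedTuple):
    """Hypothetical NE representation: character offsets [start, end)."""
    start: int
    end: int


def align(gc: set[Span], system: set[Span]) -> dict[str, int]:
    """Count correct, missing and spurious NEs by exact span matching.

    Only an exact boundary match counts as correct, so a partially
    identified NE yields one spurious NE (system side) and one missing
    NE (GC side). Classification is ignored in this sketch.
    """
    return {
        "correct": len(gc & system),   # same boundaries on both sides
        "missing": len(gc - system),   # in the GC, not in the output
        "spurious": len(system - gc),  # in the output, not in the GC
    }
```

For example, with `gc = {Span(0, 6), Span(20, 26)}` and `system = {Span(0, 6), Span(20, 23)}`, the second NE is only partially identified, so `align(gc, system)` returns `{"correct": 1, "missing": 1, "spurious": 1}`.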

In the Second HAREM, the combined semantic classification (CSC) measure will be used.

In general, an NE in the GC can be vague, admitting several interpretations (N).

System outputs can also contain vague NEs, which can give rise to spurious classifications (M).

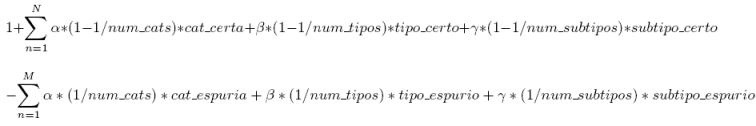

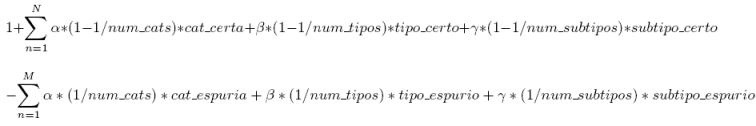

Each NE will be evaluated by the following equation:

num-cats = number of possible CATEGory values (10 in the complete scenario, possibly fewer in selective scenarios)

num-tipos = number of possible values for TIPO for the given CATEGory

num-subtipos = number of possible values for SUBTIPO for the given CATEG/TIPO pair

cat-certa = 1 (if CATEG is correct) or 0 (if CATEG is wrong)

cat-espuria = 1 (if CATEG is spurious) or 0 (if it's not)

tipo-certo = 1 (if TIPO is correct) or 0 (if TIPO is wrong)

tipo-espurio = 1 (if TIPO is spurious) or 0 (if it's not)

subtipo-certo = 1 (if SUBTIPO is correct) or 0 (if SUBTIPO is wrong)

subtipo-espurio = 1 (if SUBTIPO is spurious) or 0 (if it's not)

α, β and γ are parameters, to be adjusted later, that correspond to the weights given to CATEGories, TIPOs and SUBTIPOs, respectively.

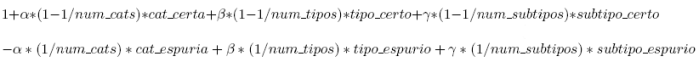

If an NE is vague neither in the GC nor in the system's output, the previous equation can be simplified to:

In addition to this measure for correctly identified NEs, the numbers of missing and spurious NEs will also be collected.
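The per-NE equation survives here only as images. As a rough illustration of how the quantities defined above could combine for an NE that is vague neither in the GC nor in the system's output, here is a Python sketch. Its form is an assumption, not the official HAREM equation: each level (CATEG, TIPO, SUBTIPO) is taken to earn weight × (1 − 1/n) when correct and to lose weight × 1/n when spurious, which agrees with the note that a level whose only value is OUTRO has factor 1 − 1 = 0. It also ignores any dependence between levels (for example, whether TIPO still counts when CATEG is wrong). `level_term` and `csc_non_vague` are hypothetical names.

```python
from __future__ import annotations


def level_term(weight: float, correct: int, spurious: int, n_values: int) -> float:
    """Contribution of one level (CATEG, TIPO or SUBTIPO) to an NE's score.

    ASSUMED form, not the official equation: a correct value earns
    weight * (1 - 1/n_values); a spurious value costs weight * (1/n_values).
    Under this form, correct == spurious == 0 contributes 0.
    """
    return weight * (correct * (1 - 1 / n_values) - spurious / n_values)


def csc_non_vague(alpha: float, beta: float, gamma: float,
                  cat: tuple[int, int, int],
                  tipo: tuple[int, int, int],
                  subtipo: tuple[int, int, int]) -> float:
    """Hypothetical CSC for an NE that is vague neither in the GC nor in
    the system output.

    Each tuple is (certo, espurio, num_values), mirroring cat-certa,
    cat-espuria and num-cats (and likewise for TIPO and SUBTIPO).
    alpha, beta and gamma weight CATEG, TIPO and SUBTIPO.
    """
    return (level_term(alpha, *cat)
            + level_term(beta, *tipo)
            + level_term(gamma, *subtipo))
```

For example, with α = β = γ = 1, a correct CATEG out of 10, a correct TIPO out of 4 and a SUBTIPO slot whose only value is OUTRO, `csc_non_vague(1, 1, 1, cat=(1, 0, 10), tipo=(1, 0, 4), subtipo=(1, 0, 1))` gives about 0.9 + 0.75 + 0 = 1.65 under these assumptions.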

The metrics (which aggregate the values of the measure over all NEs) are the usual ones:

Precision

Precision measures the quality of the system's output: it is the proportion of correct answers in all answers returned by the system.

Precision = Number of correctly classified NEs / Number of NEs classified by the system

Precision = Σ score obtained by each NE / Maximum score if all system classifications were correct

Recall

Recall measures the proportion of solutions in the GC that the system was able to reproduce.

Recall = Number of correctly classified NEs / Number of classified NEs in the GC

Recall = Σ score obtained by each NE / Maximum score obtainable in the GC
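Precision and recall share the same numerator (the summed score) and differ only in the denominator: the system's own maximum versus the GC's maximum. The sketch below makes that explicit. `precision_recall` is a hypothetical helper, and returning 0.0 for an empty denominator is an assumed convention, not something the guidelines specify.

```python
def precision_recall(ne_scores: list[float],
                     sys_max: float,
                     gc_max: float) -> tuple[float, float]:
    """Score-based precision and recall.

    ne_scores: score obtained by each NE in the system's output.
    sys_max:   total score if every system classification were correct.
    gc_max:    maximum total score obtainable from the GC.
    """
    obtained = sum(ne_scores)
    # Assumed convention: an empty denominator yields 0.0 instead of an error.
    precision = obtained / sys_max if sys_max else 0.0
    recall = obtained / gc_max if gc_max else 0.0
    return precision, recall
```

With binary scores (1 per correct NE, 0 otherwise) this reduces to the count-based formulas: a system that returns 4 NEs, 3 of them correct, against a GC of 6 NEs gets `precision_recall([1, 1, 1, 0], sys_max=4, gc_max=6) == (0.75, 0.5)`.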

F-measure

The F-measure combines precision and recall.

F-measure = (2 * Precision * Recall) / (Precision + Recall)
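The formula above is the harmonic mean of precision and recall. A direct Python transcription follows; returning 0.0 when both inputs are zero is an assumed convention to avoid division by zero.

```python
def f_measure(precision: float, recall: float) -> float:
    """Balanced F-measure: harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both values are zero
    return 2 * precision * recall / (precision + recall)
```

For precision 0.75 and recall 0.5, the F-measure is 2 × 0.375 / 1.25 = 0.6. Because it is a harmonic mean, a system cannot compensate for very low recall with high precision: `f_measure(1.0, 0.0)` is 0.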

Overgeneration

Overgeneration measures the proportion of spurious results in the system's output.

Overgeneration = Number of spurious NEs / Number of NEs classified by the system

Undergeneration

Undergeneration measures the proportion of the analyses in the GC that are missing from the system's output.

Undergeneration = Number of missing NEs / Number of NEs in the GC
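Overgeneration and undergeneration are plain ratios of counts, so they can be computed directly from the correct/missing/spurious tallies. In this sketch, `generation_rates` is a hypothetical helper, and the 0.0 result for an empty denominator is an assumed convention.

```python
def generation_rates(n_spurious: int, n_system: int,
                     n_missing: int, n_gc: int) -> tuple[float, float]:
    """Return (overgeneration, undergeneration) as proportions.

    overgeneration:  spurious NEs among the NEs classified by the system.
    undergeneration: GC NEs that the system failed to produce.
    """
    # Assumed convention: an empty denominator yields 0.0.
    over = n_spurious / n_system if n_system else 0.0
    under = n_missing / n_gc if n_gc else 0.0
    return over, under
```

For a system that outputs 4 NEs, 1 of them spurious, against a GC of 5 NEs of which 2 are missing, `generation_rates(1, 4, 2, 5)` gives `(0.25, 0.4)`.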

- Given the new syntax of the Second HAREM, the First HAREM measures for identification and for classification by categories now correspond to applying filters (selecting no categories in the first case and no types in the second).

- The semantic classification measure just presented is a generalization and improvement of the CSC used in the First HAREM that systematically takes into account the possibility of systems producing more than one classification per NE. See a detailed example of the evaluation of vague NEs.

- Selective scenarios are also available in the Second HAREM: systems can choose to classify only a subset of the CATEGories, or only CATEG together with some or none of the TIPOs or SUBTIPOs. In the latter case, the measures are relative to the chosen subset and are obtained after applying the respective filters.

- Unlike in the First HAREM, partially identified NEs will not be considered correct. They will be treated as incorrect: the NE produced by the system is counted as spurious (identified by the system but not present in the GC), and the corresponding NE in the GC is counted as missing.

- In the Second HAREM, the ALT tag, besides being used to indicate an unsystematic set of alternatives, will also be used to indicate different, equally valid possibilities for the classification of a piece of text. See the (incomplete) list of classifications where ALT should be used. Participants are strongly encouraged to use this tag in their systems' output as well. In addition to the evaluation method used in the First HAREM (no ALTs in the participations), which we call relaxed mode, we will also offer a strict evaluation, described in ALT evaluation in different scenarios.

- Note that the CSC measure allows the following three conceptually different, and differently marked, cases to be scored separately: ignorance (unfilled attributes), certainty of being different (using the "OUTRO" attribute) and mistake (a classification different from the one in the GC).

- If a given CATEGORIA or TIPO has no SUBTIPOs, this is equivalent to having only one SUBTIPO, OUTRO (num-subtipos = 1). The corresponding multiplicative factor in the CSC equation is then 1-1 = 0.

Last update: 1 April 2008.